The Institute of Electrical and Electronics Engineers (IEEE) and the American Chemical Society (ACS) have introduced new fees targeting authors who exercise their right to self-archive accepted manuscripts under a CC BY license. cOAlition S opposes these charges because they penalize authors for complying with open access policies.

This blog post by Bodo Stern (Chief of Strategic Initiatives at the Howard Hughes Medical Institute) and Rachel Bruce (Head of Open Research Strategy, UK Research and Innovation) proposes an alternative: replace these rights-infringing fees with a transparent, service-based model. Would such a fee-for-service model for appraisal services be a better way forward?

Let’s open the conversation.

Earlier this year, the Institute of Electrical and Electronics Engineers (IEEE) introduced a new Repository License Fee, which charges authors for practicing a form of ‘green’ open access by self-archiving their author accepted manuscripts with an open license (CC BY). In 2023, the American Chemical Society (ACS) implemented a similar charge called the Article Development Charge. Both fees purport to offer an alternative compliance path for authors whose funders require open access under a CC BY license. The traditional path – ‘gold’ open access – requires authors to pay an article processing charge (APC) for open access publication in the journal.

ACS and IEEE justify these new charges as covering what ACS calls “pre-acceptance services”, which include managing peer review, supporting manuscript development, and performing other quality checks. Below, we use a more neutral term, appraisal services, which implies neither endorsement nor curation.

The new fees are a response to a growing reality: funders and institutions that promote open access are emphasizing authors’ rights to share their accepted manuscripts openly and immediately on repositories. The cOAlition S rights retention strategy, for example, secures the rights of cOAlition S-funded authors to self-archive manuscripts under a CC BY license in designated repositories to comply with the open access policy of cOAlition S, Plan S. Since repository deposition is cost-free for authors, they can, in principle, fulfill open access mandates without paying APCs. Faced with this shift, it seems ACS and IEEE have decided that if they can’t stop authors from legally sharing manuscripts, they can at least charge them for doing so.

Others have already laid out why these fees are problematic and why funders and institutions are unlikely to cover them (see cOAlition S blog and COAR blog). In essence, the issue is simple: these fees are not structured to cover a legitimate service—they are a penalty for authors who intend to exercise a right they already have.

Turning a Bad Idea into a Good One

Treating an author’s inherent right as a billable item is a missed opportunity. A thoughtfully designed and transparent fee-for-service model for appraisal services could offer a viable and even innovative alternative—provided it is applied equitably rather than selectively and does not seek to exploit rights authors already possess.

Let’s take peer review as an example. Both ACS and IEEE cite it as an undercompensated service when authors choose to post an author accepted manuscript with a CC BY license. If peer review were offered as a standalone appraisal service, it could be funded in a more principled and inclusive way that does not interfere with green open access. But for this to work, every author who uses the service must be treated equally—fees cannot be selectively applied only to those who later choose to deposit their manuscripts in repositories.

A fee-for-service model also demands accountability. Pointing to an author accepted manuscript or a version of record as evidence of peer review is insufficient—these are indirect and often opaque markers of the process. Instead, the actual outputs of peer review—the reviewer reports and author responses—should serve as the public record of the service provided. These documents offer the most direct evidence of both the labor involved and the scholarly value added.

Not all appraisal services demand this level of transparency. For example, feedback and assessments that take place before a manuscript’s public debut—say, before it appears on a preprint server—can reasonably remain confidential. But organized peer review occupies a distinct role: it surfaces scholarly discourse around an article—including critical commentary and divergent views—which are part of the intellectual scaffolding that supports further inquiry. To support cumulative, transparent scholarship, peer review should be visible and citable, alongside the work it evaluates.

A fee-for-service model offers a practical solution to the long-standing tension between green open access and traditional journal economics. It enables journals to receive fair compensation for appraisal services—such as coordinating peer review—while allowing authors to meet open access mandates through self-archiving on repositories.

Who Should Pay?

This fee-for-service model disentangles two core functions that current business models conflate: author-facing appraisal and reader-facing curation. Because appraisal is initiated by authors, it is reasonable for them or their institutions to cover its cost, while readers fund curation, for example through subscription paywalls. This arrangement improves on today’s APCs and paywalls in several ways. Appraisal fees, tied to service execution rather than manuscript acceptance, would be lower and less conflict-prone than APCs. APCs are inflated because accepted authors cross-subsidize rejected submissions, and they are conflict-prone because authors pay for a selective endorsement in which they have a vested interest. Meanwhile, paywalls in this model would apply only to journal content, while green open access ensures that peer-reviewed research remains freely available in repositories.
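The cross-subsidy argument can be made concrete with a little arithmetic. The sketch below is illustrative only: it assumes a single, uniform appraisal cost per submission and ignores every other publishing cost, and the function names and figures are hypothetical rather than drawn from any publisher's accounts.

```python
def break_even_apc(cost_per_submission, acceptance_rate):
    """Fee per accepted article when only accepted authors pay.

    Accepted authors must also cover the appraisal of rejected
    submissions, so the fee scales with 1 / acceptance_rate.
    """
    if not 0 < acceptance_rate <= 1:
        raise ValueError("acceptance_rate must be in (0, 1]")
    return cost_per_submission / acceptance_rate


def break_even_appraisal_fee(cost_per_submission):
    """Fee per submission when every author who uses the service pays."""
    return cost_per_submission


# Hypothetical figures, chosen only to make the arithmetic visible.
cost = 500.0       # assumed cost of appraising one submission
acceptance = 0.25  # assumed share of submissions that are accepted

print(break_even_apc(cost, acceptance))   # 2000.0
print(break_even_appraisal_fee(cost))     # 500.0
```

Under these assumptions, a journal that accepts one in four submissions needs an APC four times the per-submission appraisal cost, whereas a fee charged to every submitting author only needs to match that cost.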

Nonetheless, any system in which authors or readers bear direct costs risks perpetuating inequities—whether by imposing pay-to-play barriers on underfunded researchers or restricting reader access to curated content. Moving toward collective or institutional funding could mitigate these risks while sustaining both fairness and openness in appraisal and curation.

To accelerate the shift to full and equitable open access, we must say ‘no’ to opportunistic fees that penalize authors for asserting their rights—and ‘yes’ to fair, transparent, and verifiable service fees that reflect the real value publishers provide.

Related articles

- Repository License Fee. A New Compliance Option for Authors to Meet Funder Mandates

- ACS Publications provides a new option to support zero-embargo green open access

- American Chemical Society (ACS) and authors’ rights retention

- Unfair publisher fees for deposit into repositories highlight the need for authors to exercise their rights

The Web of Science, a major commercial indexing service of scientific journals operated by Clarivate, recently decided to remove eLife from its Science Citation Index Expanded (SCIE). eLife will only be partially indexed in Web of Science as part of its Emerging Sources Citation Index (ESCI). The justification offered for this decision is that eLife’s innovative publishing model was deemed to conflict with the Web of Science’s standards for assuring quality. This blog post argues that this decision works against the interest of science and will ultimately harm innovation and transparency in scientific research.

If we want to reap the full benefits of 21st-century science, scholarly communication needs reform and innovation[1]. That’s why the Howard Hughes Medical Institute (HHMI) and like-minded research organizations are supporting new approaches to scientific publishing. eLife, founded by HHMI, Wellcome, and the Max Planck Society in 2012, has delivered such innovations since its inception. In its first major contribution, eLife improved peer review by embedding consultation among peer reviewers into the process. Given this record, it is troubling that Web of Science recently decided to de-list eLife from its major index, especially considering that its publicly stated criteria for journal evaluation include the requirement that “published content must reflect adequate and effective peer review and/or editorial oversight”. Rather than helping move scholarly communication forward, Web of Science, by punishing a leader in the field, is in fact holding it back.

The decision

In 2023, eLife adopted an ambitious new publishing model, referred to as “Publish, Review, Curate”, in which research findings are first published as preprints and subsequent peer review reports and editorial assessments are published alongside the original preprints and revised articles.

Web of Science de-listed eLife from SCIE because the new publishing model can result in the publication of studies with “inadequate” or “incomplete” evidence. According to Clarivate, eLife failed “to put effective measures in place to prevent the publication of compromised content”. As one of the people who supported the implementation of eLife’s new model, I worry that this decision penalizes the adoption of innovative publishing models that experiment with more transparent and useful forms of peer review.

What makes Clarivate’s decision so counterproductive is that other journals, which use the traditional and confidential peer review model, remain indexed in the Web of Science even though some of the articles they publish also have inadequate or incomplete evidence. The difference is that they do not label these articles as such.

The decision therefore rewards journals for continuing the unhelpful practice of keeping peer review information hidden and unintentionally presenting incomplete and inadequate studies as sound science, and it punishes journals that are more transparent.

Beyond Pass/Fail Peer Review

Traditional journals use the editorial decision to publish as the signal to their readers that the article has passed peer review and the journal’s standard of sound science.

As a proxy, the decision to publish can only transmit one piece of information: approval. In contrast, eLife uses editorial assessments, produced collaboratively by eLife reviewers and published with the article, as a proxy for the peer review process. Rather than being a one-dimensional stamp of approval, eLife editorial assessments summarize strengths and weaknesses and use standard terms to rank articles on two dimensions: significance and strength of evidence. For example, the ranked standard terms for strength of evidence range from “inadequate” and “incomplete” at the low end to “compelling” and “exceptional” at the high end.

As a result, eLife does indeed occasionally publish articles that eLife reviewers judge and mark as having “inadequate” or “incomplete” evidence after final revisions, although these represent only a small fraction of the total articles published.

eLife’s model thus makes visible a reality of scientific publishing that is currently invisible.

Publishing articles with incomplete or inadequate evidence

So, how do traditional journals end up publishing studies with incomplete or inadequate evidence?

As a former editor myself, I know that editors will sometimes publish incomplete studies in the belief that these articles include important information in their respective fields that others will improve and build on. The problem isn’t the publication of such studies but the non-transparent stamp of approval which conveys a strength of evidence that simply isn’t there. Clarivate’s decision signals to journals that they can continue to give readers this (false) impression that the evidence for all their articles is better than incomplete. By contrast, the eLife model allows for the sharing of these types of studies, but with the context of an evaluation of the strength of the evidence.

The case for articles with inadequate evidence is different.

Journal editors certainly try to reject inadequate articles or compel authors to revise such articles in light of serious criticism. While these efforts seem appropriate and well-meaning at the level of individual journals, they break down at the level of the publishing system. Authors can simply submit an article rejected at one journal to another journal without note, where it may ultimately be published. Articles with inadequate evidence thus end up being published without any flag or commentary noting the problem, polluting the scientific literature.

Peer review isn’t foolproof to begin with. Indeed, studies[2] on peer review have shown that expert reviewers often miss flaws that were deliberately inserted into manuscripts by the studies’ designers. Because flaws have to be re-discovered in each successive round of confidential peer review at a different journal, keeping inadequate articles rejected becomes increasingly hard.

Adding to this problem, the pool of expert reviewers is depleted with every round of review. The inevitable outcome is that inadequate articles are published with the stamp of approval from peer review in journals that remain listed on Web of Science.

Moreover, the confidential nature of peer review at traditional journals makes it hard to grasp the full extent of the problem. It is thus unclear whether these journals publish more or fewer inadequate articles than eLife. But when they do publish those studies, the damage is more serious than at eLife because readers are led to believe that the articles report sound science.

How indexers can drive change

Science needs indexing services that move beyond mere assertions and hold journals accountable for their claims about the quality of articles they review and consider for publication.

At minimum, journals should insist that the prior peer review history of an article is shared with their editors and peer reviewers.

Authors may deserve fresh eyes on their articles, but not without convincing the new reviewers that prior reviewers got it wrong. eLife authors can already take their eLife reviewed preprints to other journals for publication, including preprints that eLife reviewers deemed “inadequate”, but the peer reviews remain publicly accessible at eLife.

Journals that conduct peer review in the open or ensure transfer of prior peer review reports clearly advance adequate and effective peer review and should thus be rewarded, not punished, by indexers.

Indexing services should also implement protocols that scrutinize whether journals effectively correct hyped or flawed publications. Science is error-prone and a journal’s stamp of approval – or even a transparent peer review process such as eLife’s – can get it wrong.

Journals would perhaps do a better job at correcting their published record if these indexing services held them more accountable. eLife’s new publishing model makes it easier to evolve in this direction. For example, editorial assessments can be improved in the future by offering revisions based on expert feedback.

From career threat to badge of honor

When we accept that published assessments can change, reflecting the maturing perception of the published work among experts, we may even overcome the most formidable cultural barrier that transparent peer review faces: the understandable and widespread concern that critical reviews can sink the work and career of the authors. If the authors got it right and the work eventually succeeds in overturning a long-held dogma, initial negative reviews can turn into a badge of honor. They demonstrate, in the written record, what challenges the authors faced on the road to recognition of their work.

Unfortunately, the decision by Clarivate to de-list eLife from SCIE is a barrier to a future where the inevitable errors of authors, peer reviewers and editors can be effectively corrected through expert input that everybody can see and benefit from.

References

[1] Sever R (2023) Biomedical publishing: Past historic, present continuous, future conditional. doi.org/10.1371/journal.pbio.3002234; Stern BM, O’Shea EK (2019) A proposal for the future of scientific publishing in the life sciences. doi.org/10.1371/journal.pbio.3000116

[2] Baxt WG et al. (1998) Who Reviews the Reviewers? Feasibility of Using a Fictitious Manuscript to Evaluate Peer Reviewer Performance. doi.org/10.1016/S0196-0644(98)70006-X; Godlee F, Gale CR, Martyn CN (1998) Effect on the Quality of Peer Review of Blinding Reviewers and Asking Them to Sign Their Reports: A Randomized Controlled Trial. doi.org/10.1001/jama.280.3.237; Schroter S, Black N, Evans S, Godlee F, Osorio L, Smith R (2008) What errors do peer reviewers detect, and does training improve their ability to detect them? doi.org/10.1258/jrsm.2008.080062

Disclosure

This blog is authored by Bodo Stern, Chief of Strategic Initiatives at the Howard Hughes Medical Institute (HHMI). HHMI is a founding member and major funder of eLife.

Update (28 November)

This blog post has been updated to note that the Web of Science recently decided to remove eLife from its Science Citation Index Expanded (SCIE). eLife will only be partially indexed in Web of Science as part of its Emerging Sources Citation Index (ESCI).

The traditional scientific publication model, characterized by gate-keeping editorial decisions, has come under increasing criticism. Critics argue that it is too slow, opaque, unfair, reliant on unqualified evaluators, dominated by a small group of individuals, inefficient, and even obsolete. In response to these critiques, two alternatives have gained traction: peer-reviewed preprints and the Publish-Review-Curate (PRC) model (Stern & O’Shea, 2019 and Liverpool, 2023). Both models share two common steps:

Step 1: Authors decide when to make their articles publicly available by depositing them as preprints on preprint servers or institutional open archives.

Step 2: These preprints are then formally reviewed by specialized services (such as Review Commons, PREreview, Peer Community In (PCI), etc.), and the reviews are made publicly accessible.

The Publish-Review-Curate model includes an additional step: curation. In the following, we will understand the curation of articles as a selective process leading to the presentation of articles in a collection organised by a journal or another service, a definition close to that of Google’s English dictionary provided by Oxford Languages. Note that some colleagues use a more extended definition for curation, ranging from simple compilation to certification of articles (Corker et al., 2024). Certain curation services (e.g., journals) select and incorporate reviewed preprints into their curated collections, providing them with an added layer of recognition that non-curated preprints do not receive.

Peer-reviewed preprints and the Publish-Review-Curate model are garnering increasing interest from various stakeholders: funders (e.g., cOAlition S), pro-preprint organizations (e.g., ASAPbio), publishers (such as those participating in the ‘Supporting interoperability of preprint peer review metadata’ workshop held on October 17 & 18 at Hinxton Hall, UK, co-organized by Europe PMC and ASAPbio), and even journals (e.g., PCI-friendly journals).

One notable example of an organisation that applies the Publish-Review-Curate (PRC) model is eLife (Hyde et al., 2022). In this model, authors first deposit their preprints and then submit them to eLife for peer review. Since 2023, eLife no longer issues a traditional accept/reject decision after a round of reviews and the collection of a publication fee. Instead, it focuses on public reviews and qualitative editorial assessments of preprints. The preprint is published on eLife’s website as a “Reviewed Preprint,” along with the editorial assessment and public reviews.

Another process often compared with the PRC model is the Peer Community In (PCI) evaluation process. In PCI, authors publish their articles as preprints on open archives, submit them to a thematic PCI, and undergo one or more rounds of peer review. Afterwards, they receive a final editorial decision (accept/reject). Accepted articles are publicly recommended by PCI, along with peer reviews, editorial decisions, and author responses. PCI’s process mirrors the PRC model, with curation—marked by the preprint’s acceptance and publication of a recommendation text—following peer review. However, PCI only makes reviews public if the article is accepted.

Ambiguities in Peer-Reviewed Preprints and the PRC Model

Two key ambiguities blur the definition of peer-reviewed preprints and the PRC model:

1. Peer review is not necessarily validation

Peer review is often confused with a system of validating or rejecting articles. However, unlike traditional journals, most peer-reviewed preprint services make no formal decision (validation/non-validation). A preprint undergoing peer review is not classified as “validated” or “not validated” based solely on reviews. Reviews do not inherently guarantee the quality of an article: they give the reader positive and negative critical elements, based on a more or less thorough and more or less complete expertise, and express the opinion of one or more reviewers on all or part of the article. It remains up to the reader to interpret them.

Readers often cannot draw conclusions about validation from peer reviews, which are generally complex and lengthy and therefore difficult for at least part of the readership to understand. Only experts who take the time to do so can fully follow the dialogue between reviewers and authors. In addition, readers often lack the context to judge the quality of reviews, as they are unable to evaluate how reviewers are selected, their expertise on the subject, or their potential conflicts of interest. This opacity contrasts with the role of editors, who do possess this information and make informed decisions on validation.

Consequently, listing, declaring, and marking preprints as “reviewed” will likely give rise to new problems, because readers may not be able to interpret peer reviews correctly and may mistakenly think that ‘reviewed’ means ‘validated’.

Concerning the definition of a peer-reviewed preprint, the position of cOAlition S is interesting. It states that:

“‘peer reviewed publications’ – defined here as scholarly papers that have been subject to a journal-independent standard peer review process with an implicit or explicit validation – are considered by most cOAlition S organisations to be of equivalent merit and status as peer-reviewed publications that are published in a recognised journal or on a platform”

cOAlition S added a note to clarify what an “implicit or explicit validation” is:

“‘A standard peer review process’ is defined as involving at least two expert reviewers who observe COPE guidelines and do not have a conflict of interest with the author(s). An implicit validation has occurred when the reviewers state the conditions that need to be fulfilled for the article to be validated. An explicit validation is made by an editor, an editorial committee, or community overseeing the review process.”

This clarification by cOAlition S makes it possible to indicate which peer-reviewed preprints have “equivalent merit and status as peer-reviewed publications that are published in a recognised journal or on a platform.”

2. Curation is not necessarily validation

Curation can be viewed as a positive classification, selection and (more or less) highlighting of reviewed articles. Curation is generally associated with positive qualitative selection: an article is selected for inclusion in a collection based on its – generally positive – qualities.

Curation is sometimes the consequence of an evaluation process resulting from peer review. It is either a form of validation (e.g. classic publication, public recommendation of a preprint by PCI) or a form of highlighting articles that have already been validated (e.g. F1000Prime, later Faculty Opinions; blogs; News & Views; recommendation of a postprint by PCI).

In theory, however, curation can be carried out without prior or simultaneous validation (see Stern & O’Shea, 2019 and Corker et al., 2024). Curation may not always follow a peer-review process leading to validation or may not be automatically associated with a validation process.

This can cause a problem if the reader mistakenly thinks that such curated peer-reviewed preprints are validated preprints.

Binary validation as a solution

Unlike many supporters of peer-reviewed preprints and, more generally, critics of gate-keeping in the traditional publication system, we at PCI advocate for the binary validation of peer-reviewed preprints, that is, a clear accept/reject decision after peer review. Our view is that the curation phase of the PRC model would benefit from a positive editorial decision. This approach has an advantage: it sends a clear signal to the reader, confirming that part of the scientific community has evaluated and validated the article.

Note that not all scientific communities have the same acceptance/rejection criteria. Some communities have stricter, more selective scientific criteria than others. The minimum acceptable strength of evidence varies between scientific journals. These variations partly explain why the same study can be published in one journal but not in another, regardless of any arguments relating to the originality or impact of the study. For example, the journals in which it is possible to publish after a recommendation by PCI Registered Reports (PCI RR) have different “Minimum required level of bias control to protect against prior data observation”.

This variation in scientific stringency to obtain validation does not call gatekeeping into question but explicitly qualifies and nuances it. The criteria need only be objective and transparent, as with PCI RR-friendly journals. The diversity and heterogeneity of acceptance thresholds reflect a diversity of communities and validation bodies. In the classic publication ecosystem, this diversity is reflected by a diversity of scientific journals. Authors are more or less familiar with this diversity, and readers are partly familiar with it. In a Publish-Review-Curate ecosystem based on validation prior to or at the same time as curation, a diversity of thresholds can be expected or even desired to obtain validation and, therefore, curation.

Two models of PRC with validation

We believe that the Publish-Review-Curate model cannot stand by itself: it should incorporate a binary editorial decision, which should be made before or during curation. We therefore propose two forms of PRC, both of which incorporate a validation step based on peer reviews:

1. Publish-Review-Curate(=Validate): In this model, curation itself acts as validation based on peer reviews. This is what most scientific journals do. For example, a journal may publish a peer-reviewed preprint based on peer reviews produced by another service, as PCI-friendly journals or journals associated with Review Commons would do.

2. Publish-Review(=>Validate)-Curate: Here, peer reviews lead to validation before the curation step. Curation gives additional value to an article that has already received an (editorial) acceptance decision based on peer review. This is what F1000 used to do or what Nature (and other journals) do by publishing News & Views to highlight an already published article.

PRC in light of the criticism of traditional publication models

Finally, let’s reassess the criticisms of the traditional publication model—long, opaque, unfair, unqualified, monopolized, inefficient, and obsolete—in the context of a PRC model with binary validation:

- Length: Preprints are published before peer review and validation, eliminating the delays where articles remain hidden from readers during evaluation.

- Opacity: Reviews are publicly available, and validation decisions can also be transparent.

- Unfairness: Transparent, objective evaluation criteria remove concerns about fairness.

- Unqualified evaluators: Ensuring that editors and reviewers are competent addresses the criticism of unqualified evaluations.

- Monopolization: A larger pool of editors, as is the case with PCI’s thematic editors, ensures a diversity of perspectives.

- Inefficiency: While no system is immune to errors, the transparency of reviews and editorial assessments allows readers to identify potential flaws.

- Obsolescence: Time will tell whether we are right or wrong. However, there is an encouraging indicator: the rapid growth in the use of Registered Reports with a binary decision post peer-review is a positive signal concerning validation in a PRC system.

In sum, the PRC model, when combined with binary validation, offers a robust alternative to traditional publishing, addressing its key criticisms while retaining the benefits of peer review and curation.

International Open Access week and this year’s theme “Community over Commercialization” provide me with an excellent opportunity to reflect on the many actions that cOAlition S has been involved in over the last few years in the journey towards Diamond Open Access (OA).

Diamond OA is often defined as an equitable model of scholarly communication where authors and readers are not charged fees for publishing or reading. But it’s more than a business model. What truly sets it apart is its community-driven nature: scholarly communities own and control all content-related elements of scholarly publishing. Diamond OA thus engages the scholarly community in all aspects of the creation and ownership of content-related elements[1], from journal and platform titles, publications, reviews, preprints, decisions, data, and correspondence to reviewer databases[2]. This content-related perimeter and scholarly independence ensure that academic interests – not commercial ones – guide publishing decisions.

From the Diamond Study to the Action Plan

In 2020, when cOAlition S published a call for proposals on Diamond OA with support from Science Europe, we knew we were onto something important, but we couldn’t have predicted the massive support that would follow. The landmark Open Access Diamond Journals Study (OADJS) that resulted from the call in 2021[3] was a first major breakthrough. The study discovered a vibrant landscape of community-driven publishing initiatives that were nevertheless isolated, fragmented, and underfunded. This study in turn led to something even more important: it gave rise to the Action Plan for Diamond OA in 2022[4], an initiative of Science Europe, cOAlition S, OPERAS, and the French National Research Agency (ANR) to further develop and expand a sustainable, community-driven Diamond OA scholarly communication ecosystem. The Action Plan proposed to align and develop common resources for the entire Diamond OA ecosystem, including journals and platforms, while respecting the cultural, multilingual, and disciplinary diversity that constitutes its strength. Over 150 organisations endorsed the Action Plan, constituting a community for reflection and further action.

Building a sustainable future: DIAMAS and CRAFT-OA

In line with the Action Plan’s goals, Horizon Europe funded two strategic and complementary projects, with cOAlition S being a partner in both initiatives: the €3m DIAMAS project began mapping institutional publishing in Europe, while the €5m CRAFT-OA project focused on activities to improve the technical and organisational infrastructure of Diamond OA. Together, they represent an €8m investment in reshaping scholarly communication.

As co-leader of the DIAMAS project, alongside Pierre Mounier from OPERAS, I am privileged to work together with 23 public service scholarly organisations from 12 European countries in raising academic publishing standards, and increasing institutional capacity for Diamond OA publishing while respecting the diverse needs of different disciplines, countries and languages in the European Research Area.

Similarly, the CRAFT-OA project, led by Margo Bargheer from the University of Göttingen library, with 23 partners from 14 European countries and in collaboration with EOSC, works to make the Open Access landscape more resilient by centralising expertise and creating a collaboration network.

The next big step: the European Diamond Capacity Hub (EDCH)

On the 15th of January 2025, we will reach another milestone with the launch of the European Diamond Capacity Hub (EDCH) in Madrid, at FECYT headquarters. With initial support from the French National Research Agency (ANR) and Centre national de la recherche scientifique (CNRS), the EDCH aims to strengthen the Diamond OA community in Europe by supporting European institutional, national and disciplinary capacity centres, Diamond publishers and service providers in their mission of Diamond OA scholarly publishing.

Integrating the results of the DIAMAS and CRAFT-OA projects, the EDCH will align and support Diamond OA Capacity Centers and ensure synergies between them. Diamond OA Capacity Centers include Diamond publishers and service providers, who in turn provide publishing services to Diamond journals and preprint servers. The EDCH will help these Centers excel by providing technical services, quality alignment, training and skills, best practices and sustainability. In turn, the Capacity Centers will offer the essential infrastructure and expertise that journal, book, and preprint communities need to publish their work successfully under the Diamond OA model.

Growing global momentum and recognition of Diamond OA

The momentum around Diamond OA isn’t just European. In 2023, cOAlition S contributed actively to the First Global Diamond OA Summit in Toluca, Mexico. Together with ANR, Science Europe, and many other organisations, we launched the idea for a global network for Diamond OA. UNESCO embraced this idea and is now leading a worldwide consultation on its implementation. As we prepare for the Second Global Summit for Diamond OA in Cape Town, focusing on social justice in academic publishing, it is clear that Diamond OA has become a global movement.

All of these activities are taking place against a background of increasing recognition of Diamond OA as an alternative to publishing models that are inherently inequitable and increasingly unsustainable. The UNESCO recommendation on Open Science (2021), the G7 Science and Technology Ministers declaration, and the Council of the European Union conclusions (2023) all call for a scholarly publishing ecosystem that is high-quality, transparent, open, trustworthy and equitable – the very essence of Diamond OA. This policy emphasis is well-founded as facilitating and aligning Diamond OA will achieve a number of desirable goals in OA publishing. First, it ensures equity by not charging fees to authors or readers. It also allows researchers to take back control of scholarly content. Diamond OA also allows Research Funding Organisations (RFOs) and Research Performing Organisations (RPOs) to control publication costs, creating a sustainable alternative to Gold OA fees that are spiralling out of control. Finally, Diamond OA ensures diversity and multilingualism, since it publishes outputs in a variety of languages and epistemic traditions.[5]

[1] Gatti, R., Rooryck, J., & Mounier, P. (to appear) Beyond “No fee”: why Diamond Open Access is much more than a business model. Ledizioni-LEDIPublishing

[2] See also Rooryck, J. (2023). Principles of Diamond Open Access Publishing: a draft proposal. The diamond papers. https://thd.hypotheses.org/35

[3] Becerril, A., Bosman, J., Bjørnshauge, L., Frantsvåg, J. E., Kramer, B., Langlais, P.-C., Mounier, P., Proudman, V., Redhead, C., & Torny, D. (2021). OA Diamond Journals Study. Part 2: Recommendations. Zenodo. https://doi.org/10.5281/zenodo.4562790

[4] Ancion, Z., Borrell-Damián, L., Mounier, P., Rooryck, J., & Saenen, B. (2022). Action Plan for Diamond Open Access. Zenodo. https://doi.org/10.5281/zenodo.6282403

[5] Pölönen, J. (2024) Diamond Open Access is fundamental for making multilingual scientific knowledge openly available, accessible and reusable for everyone. https://zenodo.org/records/11094709

Academic knowledge shouldn’t be hindered by economic disparities. However, many researchers today, particularly in developing countries, face significant barriers to participating in scholarly communication.

Traditional publishing models have often overlooked the vast economic differences between regions, creating an uneven playing field in scholarly communication. When researchers can’t afford to publish or access research, the entire scientific community loses valuable perspectives and contributions. Conversely, models that are open and encourage participation in scholarly communication are more equitable than those that do not.

To address this challenge, Information Power, on behalf of cOAlition S, developed a new Equitable Pricing Framework to foster global equity in scholarly publishing.

Why is a new pricing framework needed?

Purchasing power varies significantly across the world, making standard pricing for products and services accessible in wealthier countries unaffordable in many others. Current pricing models in publishing fail to address these global disparities, as they do not take local purchasing power into account. This affects subscription costs, read-and-publish agreements, subscribe to open (S2O) agreements, collective funding models, article processing charges (APCs), and more.

While some publishers do offer discounts to some countries, there is no consistent or transparent method for determining appropriate discount levels. Often, the reasons behind these discounts are unclear, lost in outdated agreements or tied to specific and possibly arbitrary decisions, such as a consortium’s negotiation strategy or a publisher’s attempt to expand into a new market. Whatever the origin, the process lacks transparency and equity.

Electronic Information for Libraries (EIFL) undertakes impactful work by negotiating deeply discounted agreements on behalf of its 33 member countries, but this initiative does not extend to all publishers or all developing nations. As a result, many countries still struggle to participate in scholarly communication due to unaffordable costs.

While some publishers offer waivers to authors in certain countries—typically those classified as Group A by Research4Life—this is not a universal solution. Many other countries, where the cost of APCs remains prohibitively high for researchers and institutions, are left out. There are many countries in the world that are wealthier than those classified in Group A but not wealthy enough to pay the prices paid by high-GDP countries, such as the USA, Australia or Norway.

Additionally, the practice of offering waivers can be problematic. It may be perceived as a form of charity, which risks being condescending and undermining the spirit of solidarity within the global research community.

Following a consultation with the funder, library/consortium, and publisher communities, this new pricing framework has been designed with the aim to promote greater transparency and inspire publishers and other service providers to implement more equitable pricing across different economies. This approach resembles successful models in other industries – similar to how the pharmaceutical industry has implemented tiered pricing based on countries’ capacity to pay, ensuring both equitable access and sustainable business models (Osman, F., & Rooryck, J. (2024). A fair pricing model for open access. Research Professional News).

The framework is adaptable, allowing publishers to implement changes gradually and in line with their specific circumstances. It can be applied to various pricing models, including article processing charges (APCs), subscriptions, and transformative agreements.

Key features of the framework include:

- Open, transparent data: utilizing World Bank International Comparison Program data, reflecting each country’s income and ability to pay.

- Banding: grouping countries into bands eases administration.

- Excel-based tool: allowing publishers to explore and set their own bands and differential prices using the same transparent data.

- Local currencies: raising invoices in local currencies where possible.
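As a rough illustration of how banding and differential pricing could fit together, here is a minimal Python sketch. It is not the framework’s Excel-based tool: the country names, income index values, band boundaries, and price multipliers below are all hypothetical placeholders, chosen only to show the mechanics. A real application would start from the World Bank data mentioned above and from bands a publisher sets for itself.

```python
from bisect import bisect_right

# Hypothetical income index per country, relative to the wealthiest group (1.0).
# Placeholder values for illustration only; these are NOT World Bank figures.
INCOME_INDEX = {
    "Country A": 0.08,
    "Country B": 0.35,
    "Country C": 0.70,
    "Country D": 1.00,
}

# Hypothetical band boundaries: an index at or above a boundary moves up a band.
# Three boundaries give four bands (0 = lowest income, 3 = highest income).
BAND_LOWER_BOUNDS = [0.25, 0.50, 0.75]

# Hypothetical share of the list price charged in each band.
BAND_MULTIPLIERS = [0.10, 0.40, 0.70, 1.00]


def band_for(income_index: float) -> int:
    """Return the 0-based band for a given income index."""
    return bisect_right(BAND_LOWER_BOUNDS, income_index)


def banded_price(list_price: float, income_index: float) -> float:
    """Scale a list price by the multiplier of the country's band."""
    return round(list_price * BAND_MULTIPLIERS[band_for(income_index)], 2)


if __name__ == "__main__":
    # Show the band and adjusted price for an assumed list price of 2000.
    for country, index in INCOME_INDEX.items():
        print(country, band_for(index), banded_price(2000.0, index))
```

Grouping countries into a handful of bands, rather than computing a separate price for each country, is what keeps administration simple: a publisher only has to maintain one multiplier per band.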

Where to learn more about the equitable pricing framework?

Do you want to learn more about how this framework can advance equity in scholarly publishing? Then join our webinar on the 16th of January 2025 between 17.00 and 18.00 CET. Our expert panel will include Miranda Bennett from California Digital Library, Franck Vazquez from Frontiers, César Rendón and César Pallares from Consorcio Colombia, Dave Jago and Alicia Wise from Information Power and Robert Kiley from cOAlition S.

To register your interest, please email info@informationpower.co.uk

*NEW* View the recording of the webinar on YouTube

You can also access the full report on the Pricing Framework to Foster Global Equity in Scholarly Publishing here https://zenodo.org/uploads/12784905, along with the More Equitable Pricing Tool and a set of frequently asked questions.

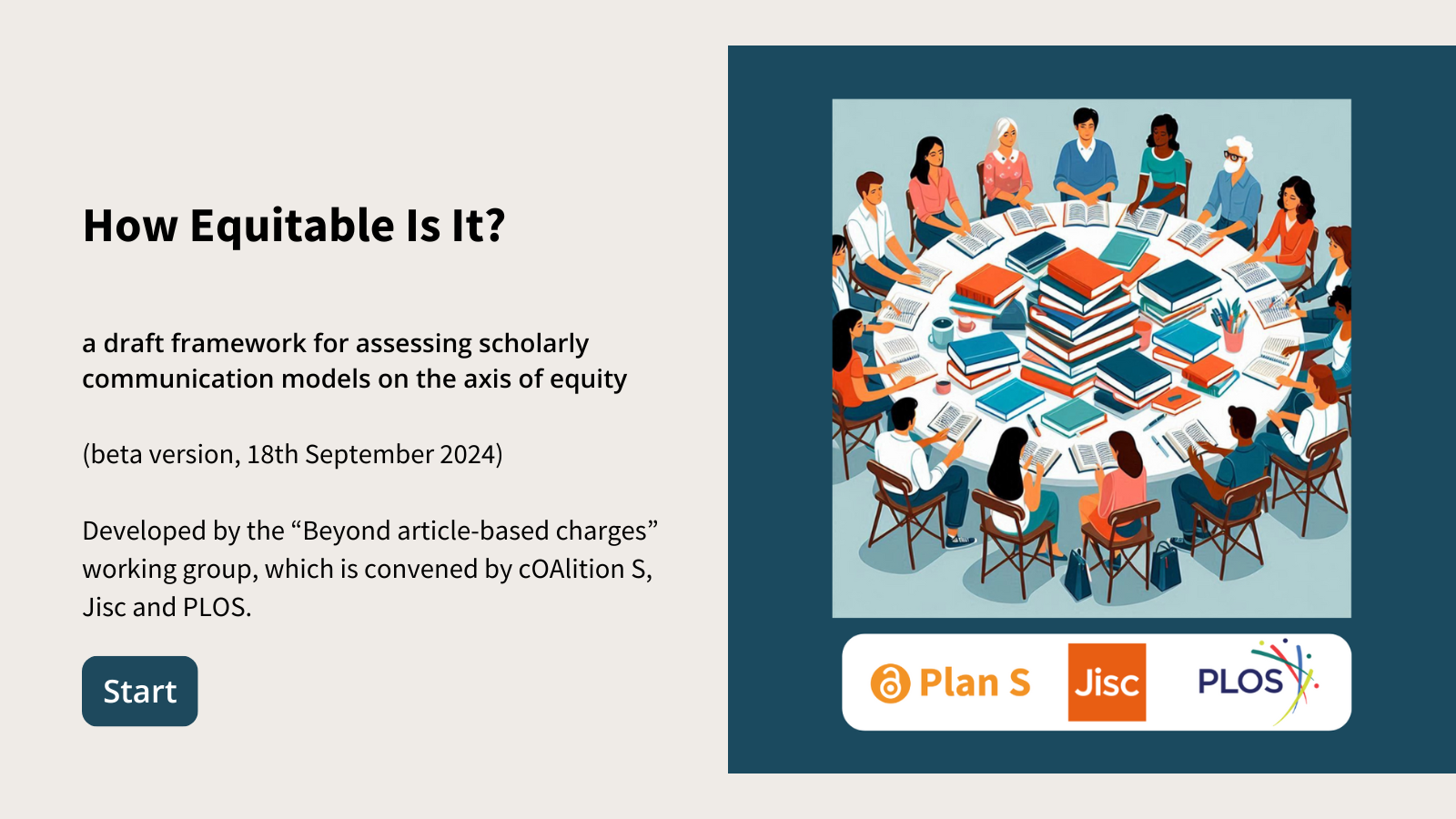

In 2023, PLOS was delighted to partner with cOAlition S and Jisc to establish a multi-stakeholder working group. Its goal was to identify business models and arrangements that moved away from article-based charges (i.e. APCs), enabling more equitable participation in knowledge-sharing.

In this blog post, we discuss the “How Equitable Is It” framework and how we, at PLOS, will use it as part of our efforts to support equitable participation in Open Science.

Background: the challenges of Open Access (OA) Publishing

We highlighted the challenges with article-based charges in the original Working Group call. The OA movement aimed to provide equitable access to research outputs, and the push to include the cost of publication in research budgets was intended to reduce the overall cost of access to published research.

Twenty years on, we see a number of unintended consequences for scholarly communication:

- APCs became established as the predominant OA business model in those countries/regions/disciplines where this economic argument worked, but they also restricted participation for authors with limited funds.

- The rate of article growth leading to ever-increasing costs for funders and research-intensive institutions.

- The article embedded as the research output of value, blocking progress towards an Open Science ecosystem.

The “How Equitable Is It?” framework

So, where does the working group’s recently launched “How Equitable Is It?” tool fit into all of this? For several years, we at PLOS have been working to make OA publishing more equitable by designing and implementing new non-APC business models, like Community Action Publishing and Global Equity. Both look to spread costs more equitably amongst those purchasing publishing services.

Community Action Publishing was designed to support our selective journals without the need for a high APC. Similarly, Global Equity does not work on the basis of a “per article” or “per unit” payment for publishing, and it reflects countries’ financial situations by reference to World Bank criteria. Both remove barriers to publishing for authors, while ensuring that everyone can read and reuse content (with proper attribution). Institutions in Research4Life (R4L) countries can participate in both models without charge.

While we’ve designed these models with equity in mind, the “How Equitable Is It?” Framework gives us the opportunity to demonstrate this to scholarly communication ecosystem stakeholders (funders, institutions, libraries, research communities) and to point the way forward for new equitable solutions.

Funders and research institutions can play a critical role in supporting the move to non-APC based business models. Viewed through an economic lens, funders and institutions–many of whom have equity in their mission statements–and librarians and consortia, who engage in collective negotiation on researchers’ behalf, can use this tool to assess publisher arrangements and models and steer investment or collaboration towards models that enable more equitable participation.

Importantly, the tool gives all scholarly communication stakeholders – publishers, funders, librarians, consortia and researchers – a common framework to discuss these aspects and together co-create more equitable solutions. The framework was deliberately launched as a beta version so that these stakeholders could give feedback and help steer its future iteration and evolution.

Supporting Open Science: future initiatives

Our work at PLOS in this area is not yet done. We are embarking on a new Research and Design project, with generous funder support, that will tackle two barriers that exclude many researchers from meaningfully participating in Open Science: the affordability of APCs and the lack of recognition for Open Science contributions beyond articles.

Our aim is to develop a new, integrated solution that enhances the visibility and discoverability of non-article research outputs, including data, code, and methods. Alongside this, grounded in principles of equity and price transparency, we will look to develop a sustainable, non-APC business model for all research outputs in collaboration with funders, libraries, and scientific institutions. The “How Equitable Is It?” framework will be relevant to our design thinking, as we explore inclusive and sustainable solutions for the future of Open Science.

Just as the “HowOpenIsIt” guide, created in collaboration with SPARC and OASPA, gave researchers a framework to assess Open Access journals and guide their decisions about where they publish their research, we hope the “How Equitable Is It?” tool will enable funders, library consortia, and research communities to evaluate publisher models on the axis of equity and seek publishing solutions that directly align with their values. Please try it out and tell us what you think!

The current release of the “How Equitable Is It?” tool is a beta version, open for comments and improvement. Stakeholders in the academic publishing ecosystem are encouraged to test the tool and provide feedback by the 4th of November via the form https://coalitions.typeform.com/Equity-Feedback to help refine the criteria and increase its utility. The Working Group will review all input and publish a revised version in early 2025.

Emerald should be applauded for adopting a policy since 2014 that states authors may make their accepted manuscript (AAM) freely available at the date of Emerald’s publication – this is more liberal than many large publishers. As part of its Open Research policies, and in response to the widespread adoption of Institutional Rights Retention Policies (IRRPs) in the UK, Emerald has published a ‘Statement relating to rights retention strategies.’ The following critique examines the statement in detail.

The Emerald Green Open Access policy “has allowed authors to self-archive their Author Accepted Manuscript (AAM) under a CC-BY-NC licence, should they choose to do so. This allows authors and their institutions to fulfil any open mandates required by funders.”

- Ignoring for now the fact that it is the publisher, not the researcher, holding the power over the author’s distribution of their intellectual creation (Emerald ‘has allowed’), most funders prefer a CC-BY licence, not a CC-BY-NC licence. Emerald permits authors to use a CC-BY licence if a transformative agreement is in place, or if an APC has been paid, but note that cOAlition S funders’ financial support for transformative agreements is due to cease after 2024.

“a CC-BY-NC licence…protects the rights of the author and publisher by preventing subsequent commercial use of the AAM.”

- I disagree. Emerald insisting on a CC-BY-NC licence for the AAM does not protect the rights of the author. If I understand correctly, owing to the transfer of copyright, it is Emerald that holds the right to license others, including the author, to make any further commercial use of the article. As the Green OA instructions state “If anyone wishes to use that AAM for commercial purposes, they should see Marketplace [The Copyright Clearance Centre’s ‘Marketplace is your source for copyright permissions, content, and reprints’].” The Non-Commercial element of the licence is therefore there to protect the rights and commercial interests of the publisher. I’m happy to be corrected if this is wrong.

“a number of UK universities have implemented a ‘Rights Retention Strategy’ which compels authors to deposit AAMs in their institutional repository.”

- The use of the word ‘compels’ implies that researchers are forced to deposit their works. On the contrary, institutional RR policies are generally adopted both in support of and with the support of their academic staff.

“this [IRRP] approach undermines the rights of authors who lose control over where or how their work is subsequently published or otherwise commercially exploited.”

- As noted above, institutional RR policies are generally adopted both in support of and with the support of their academic staff.

- I assume that Emerald’s main complaint concerns the CC-BY licence, under which any work, be it the publisher’s version or the AAM, can be re-published and commercially exploited. Yet CC-BY is the standard OA publishing licence, and there are millions of articles published under it. “CC BY articles in fully-OA journals are by far the dominant type of articles published by OASPA members… CC BY remains dominant in hybrid journals too.” (OASPA blog). This therefore appears to be a broader argument against CC BY generally.

- If I am correct above, Emerald authors have already lost control over “how their work is…commercially exploited”…… to Emerald.

- By submitting to Emerald’s conditions for Green OA which include a number of restrictions, authors lose control over how they can use and disseminate their own work.

“this approach is a threat to the integrity of the research published. AAMs could be disseminated widely with no ability for publishers to correct or retract the work and no obligation for those subsequently publishing the work to comply with COPE guidelines.”

- The same could be argued for any work published under a CC-BY licence – see above. The same is also true about corrections or retractions for works disseminated under the current CC-BY-NC licence.

“the legal basis under which a University can claim to ‘retain’ rights to an AAM is flawed.”

- Universities do not typically retain rights. The university as an employer typically waives its copyright in articles. With an IRRP, the author grants the university a licence to make the work OA under a CC BY licence. Some universities have opted for policy titles such as ‘Copyright and Publications Policy’ rather than Rights Retention policy to clarify this point. See for example the policy at the University of Edinburgh: “Upon acceptance of publication each staff member with a responsibility for research agrees to grant the University of Edinburgh a non‐exclusive, irrevocable, worldwide licence to make manuscripts of their scholarly articles publicly available under the terms of a Creative Commons Attribution (CC BY) licence, or a more permissive licence.”

- I would welcome an explanation from Emerald as to how, in their view, the legal basis of UK universities’ policies is flawed.

“This approach ignores the significant value and investment that publishers make in the peer review process and in their journal brands.”

- IRRPs do not ignore the value of the publisher. Researchers, universities and funders are all aware of the value that publishers can add, and the investment they make to create their products and run their services. For articles published in subscription journals, the publisher continues to receive payment for the work, even if the AAM is made freely available. This argument conflates the publishing service with the original rights of the author to their own intellectual content. In my opinion, the two should be treated as separate entities. The publisher can continue to take advantage of the content given to them at no charge by the author to promote their brand, and thereby make a return on their investment. Let’s not forget either that the peer review process relies on the (generally) unremunerated input of scholars. The unremunerated input from academia is considerable, and not an “investment that publishers make in the peer review process”, but rather an unremunerated intellectual investment by scholars themselves.

“The intellectual property [IP] rights in an AAM are a combination of the work of the author and that of the publisher.”

- My understanding is that the IP rights in the content of the AAM are legally the result of creation by the author and original copyright holder who wrote the work, and then made any changes following peer review. The publisher has not made any intellectual input in creating the content at this point.

“Whilst in some cases the university may own author copyright under an employment contract, it cannot unilaterally ‘retain’ the rights of the publisher or co-authors who may not be employees.”

- As mentioned above, the university does not retain the rights of the publisher, nor usually those of the author or co-authors. The university is granted a licence to make a copy available. Co-authors will have agreed to the dissemination beforehand, in the same way as they do when the corresponding author signs the publisher’s licence to publish on behalf of all authors.

The Emerald statement does not categorically state that articles backed by IRRPs and submitted for consideration for publication will be rejected. The company says that it remains ‘open to consider any equitable approach that increases Open Access routes for our authors.’ This suggests that articles will not be rejected at submission, or later, on the grounds of prior licences such as IRRPs. Clarity on this matter would be welcome.

The Emerald statement relating to rights retention strategies appears to show some misunderstandings as to how UK IRRPs operate (for example, that the author licenses the university – the university doesn’t retain rights). On a broader note, in my opinion, there is a fundamental problem with the statement, as with many publishers’ sharing policies: it is based on the premise that it is the publisher who dictates both where and how the author can disseminate their intellectual creation. That is, the publisher imposes restrictions on use by the author. Herein lies the problem. As I continue to reiterate, the boot is on the wrong foot. The author’s own dissemination of their research findings is for the researcher to decide, not an external third-party service provider who has had no input into the research process. Emerald’s green OA policy, although more liberal than most, is basically a set of restrictions on the use of the author’s content by the author. For example, AAMs can only be disseminated “On scholarly collaboration networks (SCNs) that have signed up to the STM article sharing principles” and ‘We do not currently allow uploading of the AAM to ResearchGate or Academia.edu.’ Such restrictions are precisely the reason why increasing numbers of UK institutions are adopting RR policies.

With the advancement of open science, the reliability of open bibliometric data providers compared with proprietary providers is becoming a topic of increasing importance. Proprietary providers such as Scopus, SciVal, and Web of Science have been criticised for their profit-oriented nature, their opaqueness, and lack of inclusiveness, notably of authors and works from the Global South.

At the same time, non-commercial open science infrastructures and open-source software and standards are increasingly being recommended (cf UNESCO Recommendation on Open Science, EU Council conclusions of May 2023). Some institutions, such as CNRS, Sorbonne University and CWTS Leiden have started transitioning from proprietary data sources to open ones.

The question often asked is: how do the open bibliometric databases compare with their well-established commercial counterparts? To contribute to this debate we share our recent experience at cOAlition S.

Last year we tasked scidecode science consulting with carrying out a study on the impact of Plan S on scholarly communication, funded by the European Union via the OA-Advance project. We required that the results of the work, including the data, be disseminated under an open licence. Therefore our partners at scidecode had to make sure that the bibliometric data they used in their impact study was open and also good enough to carry out the needed analyses.

To assess this, scidecode science consulting conducted an experiment with the aim of showing that the quality of bibliometric references from open metadata sources is at least as good as, if not better than, that provided by commercial entities. For this, scidecode selected a deliberately challenging benchmark: a set of publications from Fiocruz, a funder from the Global South with many non-English publications and many lacking DOIs. They then compared the coverage provided by OA.Works, a non-profit data provider using open bibliometric sources such as OpenAlex, Crossref, Unpaywall, etc., with the coverage provided by a commercial database.

The authors concluded that the resulting data based on open bibliometric sources was more comprehensive and of better quality than the data based on sources provided by the commercial provider. In addition, the use of open source data allows scidecode to comply with the requirement set by cOAlition S to openly license their results, which would not necessarily be the case if they had used a commercial provider.

Read the full details and results of the study on the scidecode website.